Thank you for reading this write-up on Trainual Tags.

Trainual is a software application that makes it simple for teams to create, manage, and access documentation efficiently. It's designed to meet the onboarding and SOP needs of organizations large and small.

It also helps ensure that all new hires understand and accept company policies. The tool is worth buying if you are looking for an effective way to manage your team and business processes.

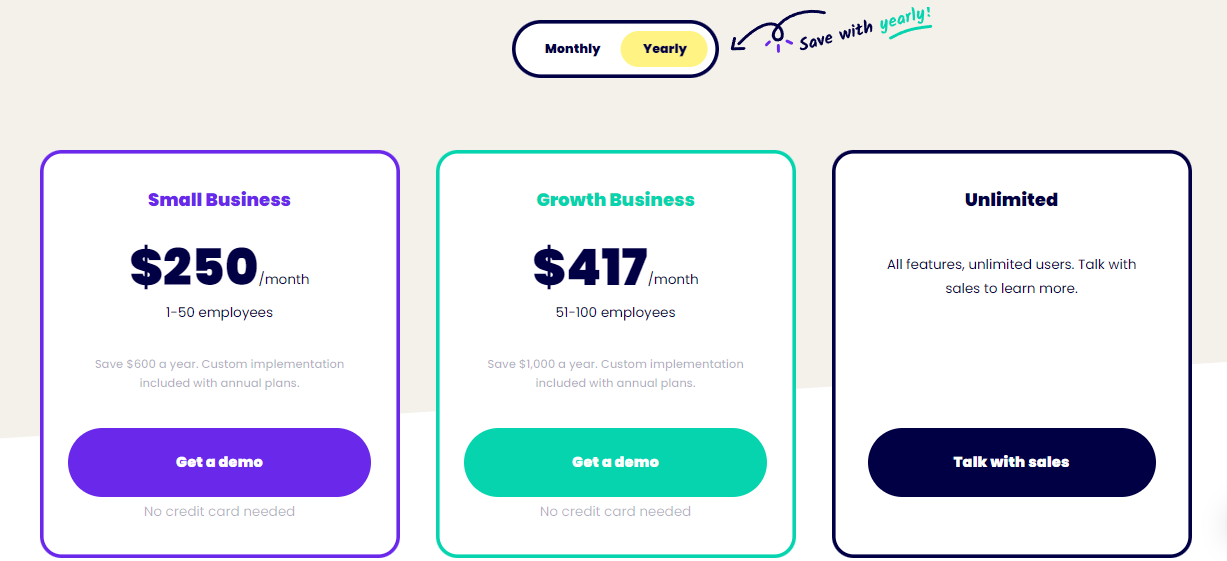

Pricing

Trainual is a powerful tool for small and medium-sized businesses that helps them streamline their training and onboarding processes. It is an excellent option for companies that need to save time and money while improving employee engagement. The software offers a comprehensive set of features, including process documentation, SOP creation, and employee onboarding. It also has a customizable dashboard, advanced reporting, and an internal communication feature. It offers a range of plans, including a free plan for up to five users.

Its price is competitive and worth the investment if you are looking for a solution that will help your business improve productivity and reduce employee turnover. However, it may not be the best option for smaller businesses or startups on a tight budget. It also does not offer as much customization as other training software.

Unlike SweetProcess, which charges a single flat fee for its platform, Trainual's pricing depends on the plan and services you choose. This can make it hard for small companies to keep up with the system's costs. Trainual also restricts features by plan and charges for inactive users. SweetProcess, on the other hand, does not charge for inactive users and gives its customers a full-featured system. SweetProcess is also easy to use and has excellent customer support; its support team responds quickly to questions and resolves problems promptly.

Features

The platform offers a range of features that help companies improve their operations and training processes. These include document management, automated tracking, and collaboration. It also gives users a search tool that makes it easy to find the information they need.

For example, a salesperson may need to understand the details of a particular product or service. Without an easy-to-access library of resources, they might have to contact their colleagues several times before finding the information they need. With Trainual, however, they can navigate to the relevant documents in a matter of minutes.

Trainual is also an excellent solution for organizations that need to build a library of policies and procedures. The software's user-friendly interface lets you create core systems that ensure everyone on the team has the same knowledge. It can even push updates to the team automatically, which saves time.

Whether you're an SEO agency or a manufacturing company, this software can make your business more efficient and reduce growing pains. Its features include organized onboarding and SOP documentation, a powerful search engine, and AI-assisted support. It also integrates with a variety of work applications, including Gusto and Rippling.

Support

Whether you're creating a new process for your team or updating old ones, Trainual provides excellent support to guide you through the whole effort. It starts by scheduling a call with a dedicated implementation specialist to discuss your specific goals and requirements for the system. After that, the specialist helps you create a list of stakeholders. These are usually department heads and other people who will be heavily involved in documenting your company's processes.

Once you have set up your account, you can start documenting your company's internal procedures. The system has a simple, Google Docs-like editor for writing content and adding visuals. It also has a robust library of templates that can serve as shortcuts in the documentation process, and you can customize these templates to match the scope of your content. You can also assign Roles to users, which lets you control which users see particular documents.

Beyond its own software, Trainual also integrates with third-party apps like Slack and Asana. This makes it easier for teams to access important information and collaborate effectively. Note, however, that the platform needs a stable internet connection to work well; otherwise, it can slow down your workflow. This is especially true for large teams with multiple locations. Fortunately, Trainual offers a free trial so you can evaluate it before you invest in the system.

Conclusion: Trainual Tags

Trainual offers a simple, user-friendly, and visually pleasing way to document business processes. It has been the go-to tool for countless businesses since its launch in 2019. The company's founder, Chris Ronzio, built and sold a national event video production company before launching Trainual. His key to success was streamlined training and systems that drove repeatable results.

The platform has a number of features that help companies build smooth processes and winning cultures. For instance, it lets teams onboard new hires quickly by creating a digital employee handbook. It also provides a central repository for important documents, such as company files and policies. This saves time and resources by reducing the need to search through old emails and outdated Google Docs.

Another feature is the ability to track tasks and assign them to specific team members. This lets managers monitor what everyone is working on and ensures that team members are always in the loop. Trainual can also connect to other apps and services, such as Slack, Justworks, Gusto, Rippling, and Zenefits.

This tool is ideal for any business that involves a lot of travel or has employees in different locations. It helps them stay on top of their work and provide the best possible service to customers. For example, an SEO agency can use Trainual to build detailed workflows and training materials for its employees. Manufacturers can also use it to create step-by-step workflows for quality control and for training staff on how to troubleshoot issues.